Jun 23, 2024

Top Essential SQL Functions in Databricks for 2024: A Comprehensive Guide

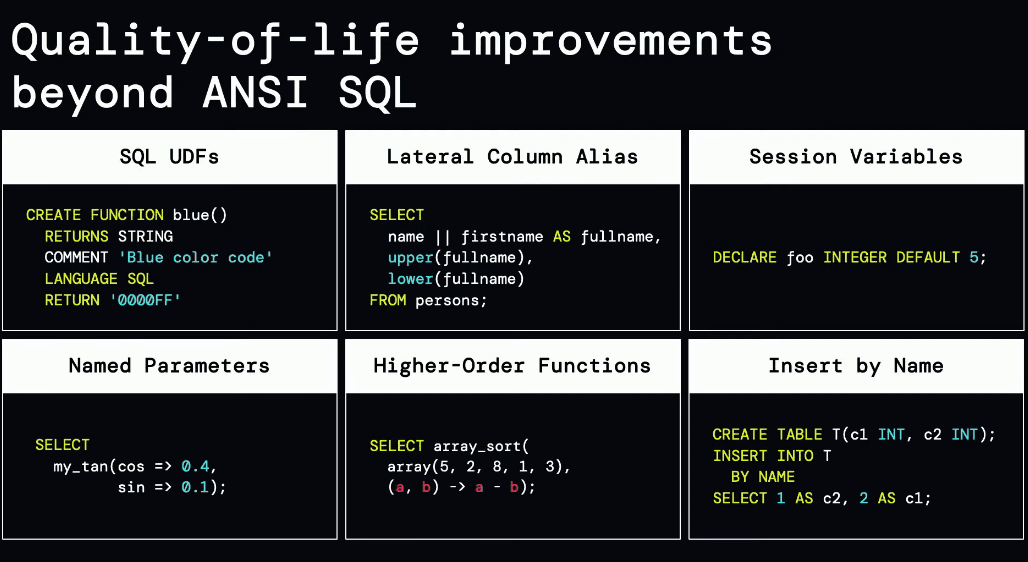

We delved into features of Databricks SQL which can provide Quality-of-life improvements, each designed to streamline your workflows and enhance data processing efficiency. From handling semi-structured data with ease to leveraging powerful machine learning capabilities directly in SQL, these features are set to revolutionize how you interact with your data. Let's recap the key insights and practical applications we've covered.

1. Variant Data Type in Databricks

What is the Variant Data Type?

The Variant data type in Databricks provides a unified way to store and query semi-structured data. It allows you to work with different data formats such as JSON, Parquet, and Avro without having to convert them to a specific schema upfront.

Why Use Variant Data Type?

Flexibility: Handles diverse data formats seamlessly.

Simplified Schema Management: No need for upfront schema definition.

Enhanced Query Performance: Optimized for querying semi-structured data.

Example Use Cases with Code:

1. Storing JSON Data:

2. Querying Variant Data:

Output:

How Variant Data Type Standardizes Semi-Structured Data

The Variant data type simplifies the process of managing semi-structured data by providing a consistent way to store and query different formats.

It eliminates the need for complex transformations and schema management, making your data pipelines more efficient and easier to maintain.

Results

Using the Variant data type leads to:

Faster Query Performance: Querying JSON as Variant is 8x faster than querying JSON as String format.

Reduced Complexity: Simplifies data ingestion and transformation processes.

Enhanced Flexibility: Easily integrates diverse data formats without extensive preprocessing.

2. Named Parameters in Databricks SQL

What are Named Parameters?

Named parameters in Databricks SQL allow you to assign specific names to the parameters in your SQL functions. This feature makes your SQL queries more readable and easier to maintain by clearly indicating the purpose of each parameter.

Why Use Named Parameters?

Clarity: Improves readability by clearly identifying the purpose of each parameter.

Maintainability: Simplifies query updates and modifications.

Flexibility: Allows you to specify parameters in any order, enhancing ease of use.

Example Use Cases:

Updating Records Efficiently

Imagine you are managing a sales database and need to update the discount rate for a specific product based on certain conditions. Named parameters make this task straightforward and clear.

Code Explanation:

Table Creation:

This command creates a table named

saleswith four columns:sale_id,product,amount, anddiscount.

Inserting Sample Data:

Here, two rows of sample data are inserted into the

salestable.

Updating Records with

Named Parameters:This SQL command updates the

discountcolumn in thesalestable. It uses named parameters (:product_name,:new_discount,:sale_id) to make the query more readable and maintainable.The

CASEstatement checks if theproductmatches the:product_nameparameter and sets the discount to:new_discountif true; otherwise, it retains the existing discount.

Setting and Using

Named Parameters:Named parameters

@sale_id,@product_name, and@new_discountare set with specific values.The

EXECUTE IMMEDIATEstatement executes theUPDATEcommand using the named parameters, updating the discount for the specified sale.

Output:

How Named Parameters Enhance SQL Queries

Named parameters significantly enhance the readability and maintainability of SQL queries by allowing you to specify parameters with clear, descriptive names. This reduces the potential for errors and makes it easier for others to understand your queries.

Key Benefits:

Improved Readability: Queries are easier to understand with descriptive parameter names.

Simplified Debugging: Easier to identify and fix issues with clear parameter naming.

Flexible Parameter Order: Specify parameters in any order, making query writing more intuitive.

3. INSERT BY NAME in Databricks SQL

What is INSERT BY NAME?

INSERT BY NAME allows you to insert data into a table without worrying about the exact order of columns in your SELECT statement. This feature automatically matches the columns from your SELECT statement to the target table columns by name, making the process more intuitive and less error-prone.

Before the INSERT BY NAME feature was introduced, inserting data into tables with many columns required meticulous attention to the order of columns. This could be cumbersome and error-prone, especially with wide tables. Let's look at a comparison between the traditional method and the new method using INSERT BY NAME.

Solving Daily Problems with INSERT BY NAME

Consider a scenario where you are working with a large and complex data pipeline, often dealing with tables having dozens of columns. Here’s how INSERT BY NAME can simplify your daily work:

Example Problem

You have a table SalesData with many columns, and the order of columns in your source data frequently changes. Manually matching these columns every time you insert data is time-consuming and error-prone.

Creating the Table:

Inserting Data with Traditional Method:

With traditional SQL, you must ensure the column order in your

INSERTstatement matches the table:Inserting Data with INSERT BY NAME:

Now, you can focus on the data rather than the order of columns:

Or even in any order:

Benefits in Daily Use

Reduced Complexity: No need to manually order columns, reducing mental overhead and potential for errors.

Improved Readability: SQL statements become more readable and maintainable.

Time Savings: Spend less time checking and rechecking column orders, speeding up development.

By using INSERT BY NAME, you can simplify your SQL data insertion tasks, making your daily work more efficient and less error-prone.

Link to Databricks Docs

4. Unnamed Parameter Markers in Databricks SQL

What are Unnamed Parameter Markers?

Unnamed Parameter Markers (?) are placeholders in SQL statements, allowing you to dynamically pass values during execution. This feature enhances query flexibility and security by preventing SQL injection attacks.

Why Use Unnamed Parameter Markers?

Dynamic Query Execution: Easily execute queries with varying parameters without changing the query structure.

Enhanced Security: Protect against SQL injection by using parameterized queries.

Simplified Code Maintenance: Reduce code complexity by reusing the same query structure with different values.

With Unnamed Parameter Markers, you can efficiently handle dynamic queries, improving both performance and security in Databricks SQL.

5. Run Federated Queries on Snowflake from Databricks

Running federated queries on Snowflake from Databricks is now in public preview! This powerful feature allows you to seamlessly query Snowflake data directly from Databricks, enabling a unified data analysis experience without moving data across platforms.

Setting Up Lakehouse Federation: Step-by-Step Guide

To run federated queries on Snowflake data, follow these steps to set up Lakehouse Federation in your Databricks environment:

Workspace Requirements:

Ensure your workspace is enabled for Unity Catalog.

Compute Requirements:

Ensure network connectivity from your Databricks Runtime cluster or SQL warehouse to Snowflake.

Use Databricks Runtime 13.3 LTS or above in shared or single-user access mode.

SQL warehouses must be Pro or Serverless.

Permissions:

To create a connection, you need to be a metastore admin or have the CREATE CONNECTION privilege.

To create a foreign catalog, you need CREATE CATALOG permission on the metastore and ownership or CREATE FOREIGN CATALOG privilege on the connection.

Execution Guide

Create a Security Integration in Snowflake (Optional)

If you prefer using single sign-on (SSO) for authentication, create a security integration in the Snowflake console:

Replace <integration-name>, <workspace-url>, and <duration-in-seconds> with your specific details.

Create a Connection

A connection specifies the path and credentials for accessing Snowflake. Use the following SQL commands in a Databricks notebook or SQL query editor:

Replace placeholder values with actual credentials and secure them using Databricks secrets.

Create a Foreign Catalog

A foreign catalog mirrors your Snowflake database in Unity Catalog, allowing you to manage and query Snowflake data:

Replace <catalog-name>, <connection-name>, and <database-name> with your specific details.

Supported Pushdowns

Federated queries on Snowflake support the following operations:

Filters

Projections

Limit

Joins

Aggregates (e.g., AVG, COUNT, MAX, MIN, SUM)

Functions (e.g., string, mathematical, date, time, and miscellaneous functions)

Window functions (e.g., DenseRank, Rank, RowNumber)

Sorting

Data Type Mappings

When querying Snowflake from Databricks, data types map as follows:

Snowflake decimal, number, numeric →

DecimalTypeSnowflake bigint, byteint, int, integer, smallint, tinyint →

IntegerTypeSnowflake float, float4, float8 →

FloatTypeSnowflake double, double precision, real →

DoubleTypeSnowflake char, character, string, text, time, varchar →

StringTypeSnowflake binary →

BinaryTypeSnowflake boolean →

BooleanTypeSnowflake date →

DateTypeSnowflake datetime, timestamp, timestamp_ltz, timestamp_ntz, timestamp_tz →

TimestampType

With Lakehouse Federation, you can leverage the power of Databricks and Snowflake together, providing a seamless and efficient way to query and analyze your data across platforms.

6. The ai_classify Function in Databricks SQL

What is ai_classify?

The ai_classify function leverages Databricks’ machine learning capabilities to classify text data directly within your SQL queries. It uses pre-trained models to identify and categorize text into predefined classes, streamlining the process of text classification without the need for external tools.

Why Use ai_classify?

Seamless Integration: Incorporate advanced machine learning directly into your SQL workflows.

Efficiency: Quickly classify large volumes of text data.

Flexibility: Apply pre-trained models to various classification tasks.

Example Use Case:

Suppose you have a table of product descriptions, and you want to classify each product into categories such as electronics, clothing, and home goods.

Explanation:

Creating the Table:

The code creates a table

customer_reviewsto store sample reviews.Each review has an

review_idandreview_text.

Inserting Data:

The table is populated with four different reviews.

Classifying Reviews:

The

ai_classifyfunction is used to classify the review text into categories using a specified machine learning model (feedback_modelin this case).

Output:

Results:

Using the ai_classify function, you can easily categorize product descriptions, enhancing your ability to organize and analyze product data. The function can be applied to various use cases such as inventory management, marketing campaigns, and more.

More Useful Example:

Let’s consider a scenario where you need to classify incoming customer emails into different support categories such as technical support, billing, and general inquiries.

Output:

Results:

By applying the ai_classify function to customer emails, you can automatically route emails to the appropriate support teams for more efficient handling and quicker resolution times. This leads to improved customer satisfaction and operational efficiency.

Conclusion

We’ve highlighted several Databricks SQL features that offer significant quality-of-life improvements for data engineers and analysts. From the flexibility of the Variant data type to the efficiency of named parameters and the intuitive nature of INSERT BY NAME, each feature brings a unique advantage to your data workflows. Leveraging these tools not only streamlines your processes but also enhances the performance and maintainability of your SQL queries, empowering you to achieve more with your data.