Jun 16, 2024

All About the Databricks Data + AI Summit 2024

Major features announced at the Data + AI Summit 2024

The Data + AI Summit 2024 was a landmark event, packed with major announcements from Databricks. The company introduced a wide range of updates and features across data engineering, analytics, and artificial intelligence. Let's dive into the key highlights, what they mean for the industry, and how you can use them in your projects:

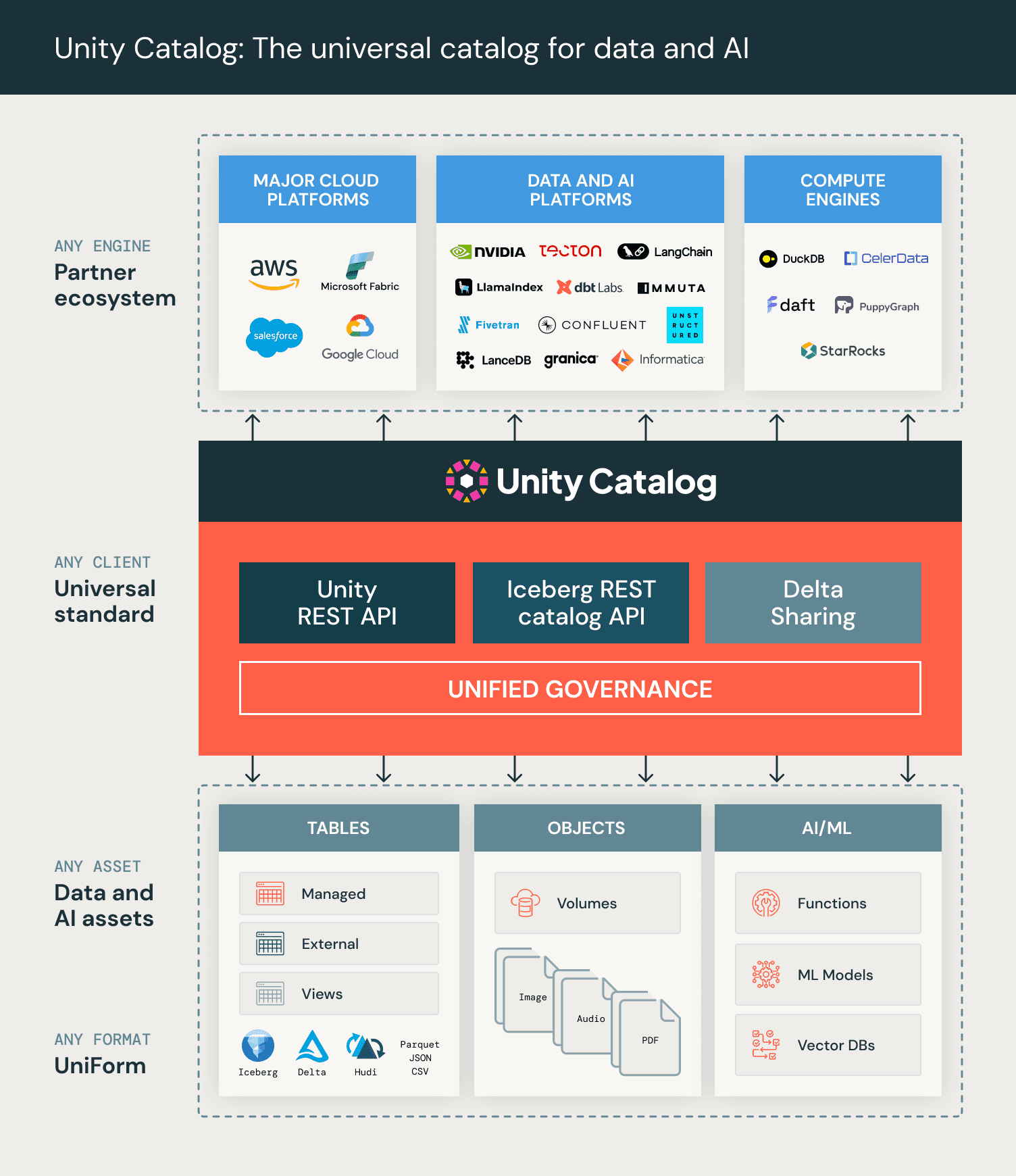

1. Open Sourcing Unity Catalog

Databricks has taken a significant step by open-sourcing Unity Catalog. The move aims to democratize data governance, allowing broader access and collaboration across platforms. Unity Catalog supports comprehensive governance, data lineage, and fine-grained access controls, strengthening data management across organizations. You can check out the open-sourced Unity Catalog here.
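To make the access controls more concrete, here is a minimal plain-Python sketch of how hierarchical privileges work in Unity Catalog's three-level `catalog.schema.table` namespace: reading a table requires `USE CATALOG` on its catalog, `USE SCHEMA` on its schema, and `SELECT` on the table, and a privilege granted on a parent object is inherited by the objects inside it. The `Grants` class and the `main`, `sales`, and `hr` names are hypothetical; real grants are managed with SQL `GRANT` statements or the Unity Catalog APIs, not with this code.

```python
from dataclasses import dataclass, field

# Simplified, hypothetical model of Unity Catalog-style privilege checks.
# Securables are named "catalog", "catalog.schema", or "catalog.schema.table".

@dataclass
class Grants:
    entries: dict[tuple[str, str], set[str]] = field(default_factory=dict)

    def grant(self, principal: str, securable: str, privilege: str) -> None:
        self.entries.setdefault((principal, securable), set()).add(privilege)

    def has(self, principal: str, securable: str, privilege: str) -> bool:
        # A privilege granted on a parent (catalog or schema) is inherited by its
        # children, so check the object itself and every ancestor.
        parts = securable.split(".")
        for i in range(1, len(parts) + 1):
            ancestor = ".".join(parts[:i])
            if privilege in self.entries.get((principal, ancestor), set()):
                return True
        return False

    def can_select(self, principal: str, table: str) -> bool:
        catalog, schema, _ = table.split(".")
        return (
            self.has(principal, catalog, "USE CATALOG")
            and self.has(principal, f"{catalog}.{schema}", "USE SCHEMA")
            and self.has(principal, table, "SELECT")
        )

g = Grants()
g.grant("analysts", "main", "USE CATALOG")
g.grant("analysts", "main.sales", "USE SCHEMA")
g.grant("analysts", "main.sales", "SELECT")  # inherited by every table in main.sales

print(g.can_select("analysts", "main.sales.orders"))  # True
print(g.can_select("analysts", "main.hr.salaries"))   # False: no USE SCHEMA on main.hr
```

Granting `SELECT` at the schema level, as above, is what lets governance scale: new tables in `main.sales` become readable without a per-table grant.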

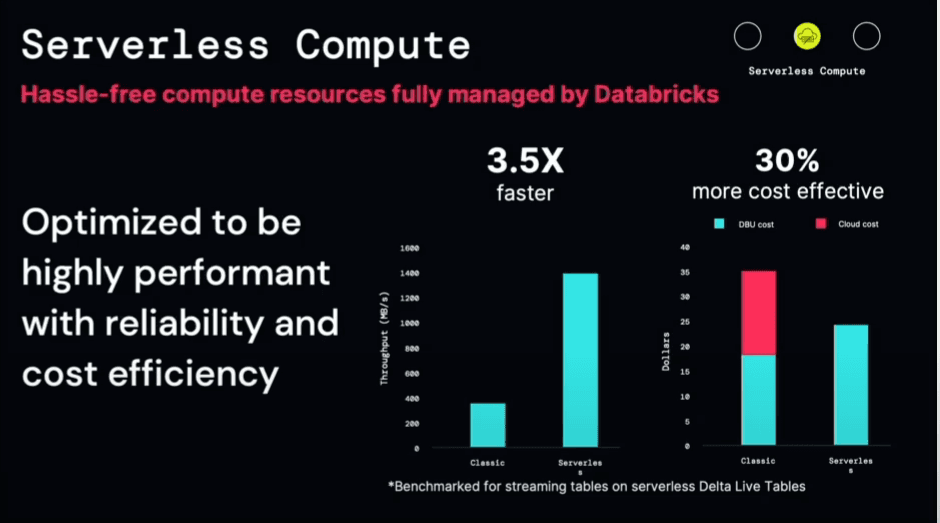

2. Databricks Goes 100% Serverless

In a game-changing move, Databricks announced its transition to a fully serverless platform. This shift simplifies infrastructure management and offers elastic scalability, reduced operational overhead, and cost efficiency. The serverless architecture is designed to deliver strong performance and agility for data engineering and analytics workloads.

Understanding Databricks Serverless Compute

Serverless computing in Databricks removes the need for manual cluster management, allowing users to run applications without worrying about infrastructure. Resources are allocated dynamically based on demand, ensuring optimal performance and cost efficiency. This model follows a pay-per-use approach, where companies only pay for the resources they actually use.
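Whether pay-per-use actually saves money comes down to simple arithmetic. The sketch below compares an always-on cluster with pay-per-use billing. All rates and hours are hypothetical placeholders, not Databricks prices, and the serverless hourly rate is deliberately assumed to be higher, to show that the savings depend on how much of the time work is actually running.

```python
# All figures are hypothetical placeholders for illustration, not Databricks prices.
CLUSTER_RATE = 2.0     # assumed cost per hour of an always-on cluster
SERVERLESS_RATE = 3.0  # assumed (higher) cost per busy hour on serverless

def always_on_cost(hours_in_month: float, rate: float = CLUSTER_RATE) -> float:
    """A provisioned cluster is billed for every hour it is up, busy or idle."""
    return hours_in_month * rate

def pay_per_use_cost(busy_hours: float, rate: float = SERVERLESS_RATE) -> float:
    """Pay-per-use billing charges only for the hours when work actually runs."""
    return busy_hours * rate

month_hours = 730.0  # roughly one month of uptime
busy_hours = 120.0   # hypothetical: jobs run about 4 hours a day

always_on = always_on_cost(month_hours)
serverless = pay_per_use_cost(busy_hours)
# Busy hours at which both options cost the same; above this, always-on is cheaper.
break_even_hours = month_hours * CLUSTER_RATE / SERVERLESS_RATE

print(f"always-on: {always_on:.2f}")
print(f"pay-per-use: {serverless:.2f}")
print(f"break-even busy hours: {break_even_hours:.1f}")
```

With these assumed numbers, pay-per-use wins as long as the workload is busy for fewer than about 487 hours a month. A cluster kept busy most of the time would flip the result.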

Impact on Businesses

Before Serverless:

High upfront costs for cluster setup.

Underutilization of resources leading to inefficiencies.

Need for technical expertise to manage clusters.

After Serverless:

Cost Savings: Small businesses can significantly reduce costs as they only pay for the computing resources they use. The pay-per-use model eliminates the need for investing in underutilized clusters.

Ease of Use: Serverless architecture abstracts the complexity of infrastructure management. This allows small teams, often with limited technical resources, to focus on data analysis and insights rather than managing and maintaining clusters.

Scalability: Serverless services provide the flexibility to scale operations up or down based on demand, enabling small businesses to handle varying workloads efficiently.

Operational Efficiency: Midsize companies can streamline operations by eliminating the overhead associated with cluster management. This improves operational efficiency and allows IT teams to focus on more strategic tasks.

3. Delta Lake 4.0 and Apache Spark 4.0

The release of Delta Lake 4.0 brings major enhancements, including Delta Lake UniForm, which enables interoperability with Apache Iceberg and Apache Hudi. Apache Spark 4.0, meanwhile, introduces new features for data processing and analytics. Together, these updates aim to provide faster query execution, streamlined data integration, and improved data reliability.

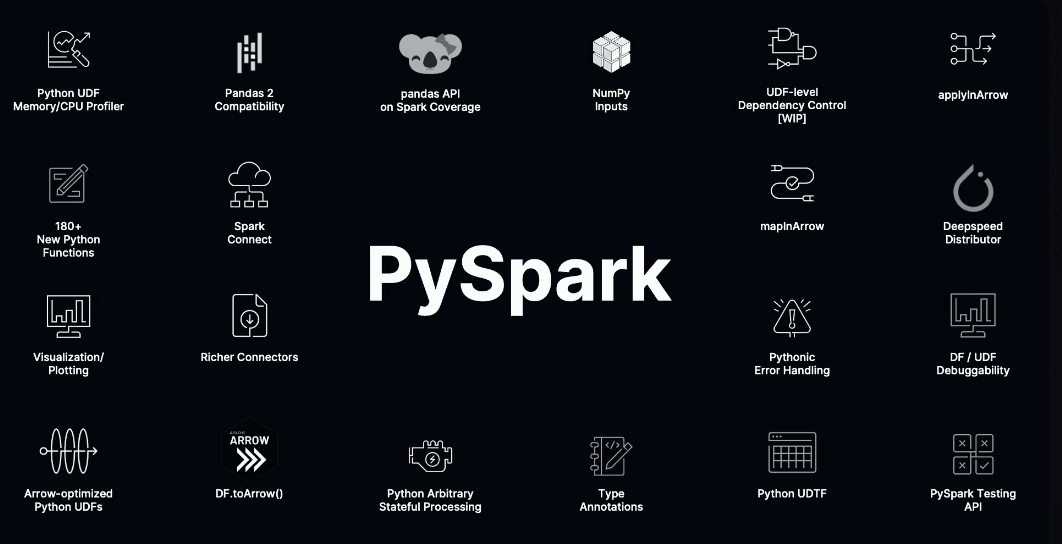

Quote by Reynold Xin, co-founder of Databricks: "Python is the first-class language for Spark."

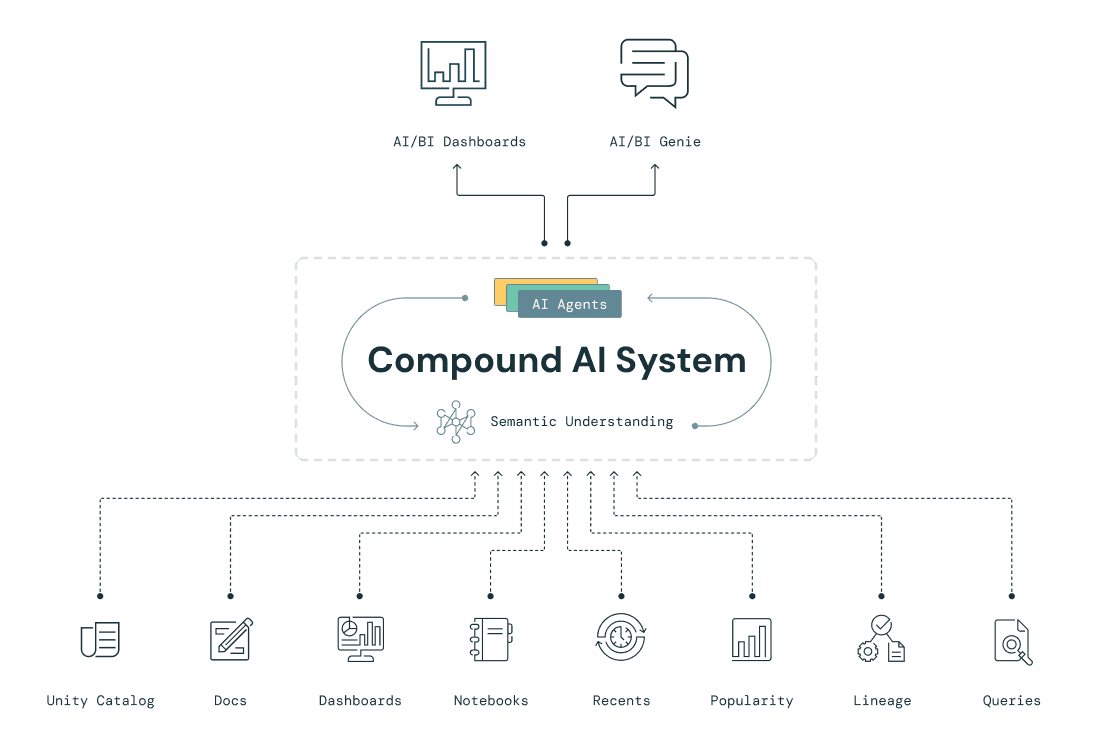

4. Databricks AI/BI Dashboard and Genie

In the AI and BI space, Databricks introduced the new AI/BI Dashboards and the Genie platform. These tools aim to change how businesses interact with their data and gain insights from it.

AI/BI Dashboards

The new AI/BI Dashboards from Databricks are designed to provide intelligent analytics in real time, enhancing decision-making across organizations. These dashboards use AI to automate and optimize data visualization, allowing users to generate insights more quickly and accurately. Key features include:

Conversational Analytics: Users can interact with their data using natural language queries, making it easier for non-technical stakeholders to extract meaningful insights without deep technical knowledge.

Automated Insights: The dashboards automatically identify trends, anomalies, and key metrics, providing users with proactive insights and reducing the need for manual analysis.

Customizable Visualizations: With a wide array of visualization options, users can customize their dashboards to suit their specific needs, ensuring that the most relevant data is always front and center.

Genie Platform

The Genie platform takes AI-driven analytics a step further by introducing conversational interfaces and intelligent agents that understand and learn from your business context. Key highlights include:

Natural Language Processing (NLP): Genie allows users to ask questions and get answers in natural language, making data interaction as simple as having a conversation. This feature is powered by advanced NLP models that understand the nuances of business terminology and context.

AI Agents: These agents learn and remember the specifics of your business, providing more accurate and contextually relevant responses over time. This continuous learning helps keep the generated insights aligned with your business goals.

Unified Governance: Built-in governance and security features ensure that all data interactions and insights comply with your organization’s policies and standards, leveraging the Unity Catalog for robust data management.

You can check out the Databricks product page here to learn more about AI/BI Genie and Dashboards.

5. Databricks Mosaic AI Agent and Compound Modelling

The Mosaic AI Agent and Compound Modelling tools are designed to enhance AI workflows. They support building compound AI systems, which combine multiple models, retrievers, and tools rather than relying on a single model, enabling more sophisticated AI applications. Mosaic AI makes it easier to integrate these components, supporting more efficient and effective AI development.

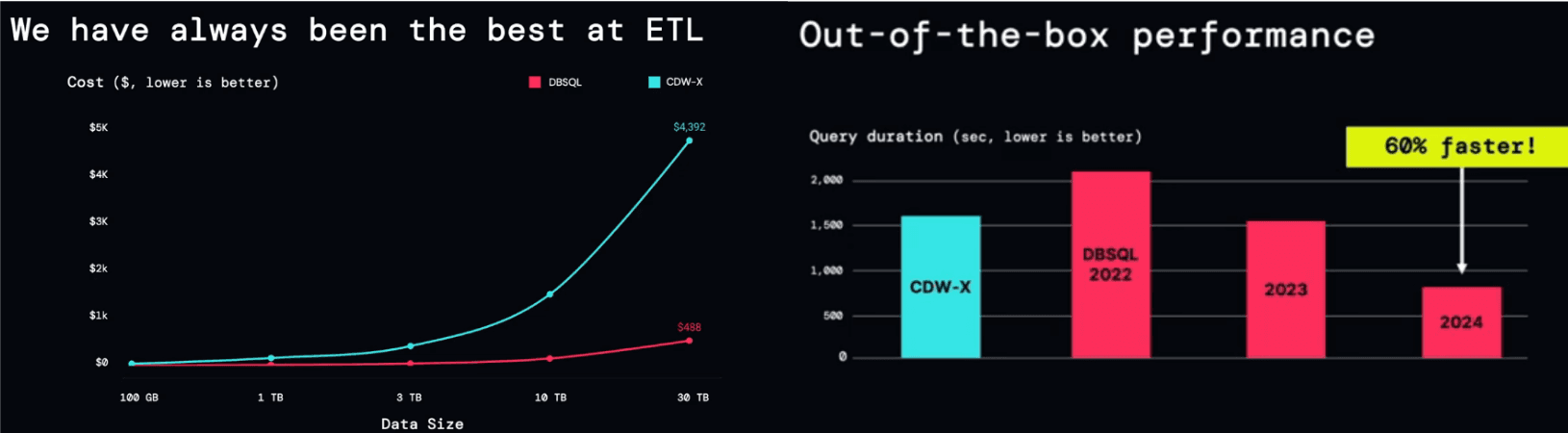

6. Databricks SQL Enhancements

Significant updates to Databricks SQL were announced, focusing on improving query performance and expanding SQL capabilities. These enhancements include support for new data types, improved query optimization, and expanded integration with BI tools, ensuring a more powerful and flexible SQL experience for users.

Out-of-the-Box Performance

The second image shows how query performance has improved over the past three years. According to Databricks, Databricks SQL in 2024 is 60% faster than its 2023 version and now outperforms CDW-X:

In 2022, Databricks SQL's query duration was considerably higher than CDW-X.

By 2023, improvements had brought it closer, but it was the 2024 enhancements that made the difference, making Databricks SQL 60% faster than its 2023 version.

These enhancements have drastically reduced query times, allowing users to achieve quicker insights and improved operational efficiency.

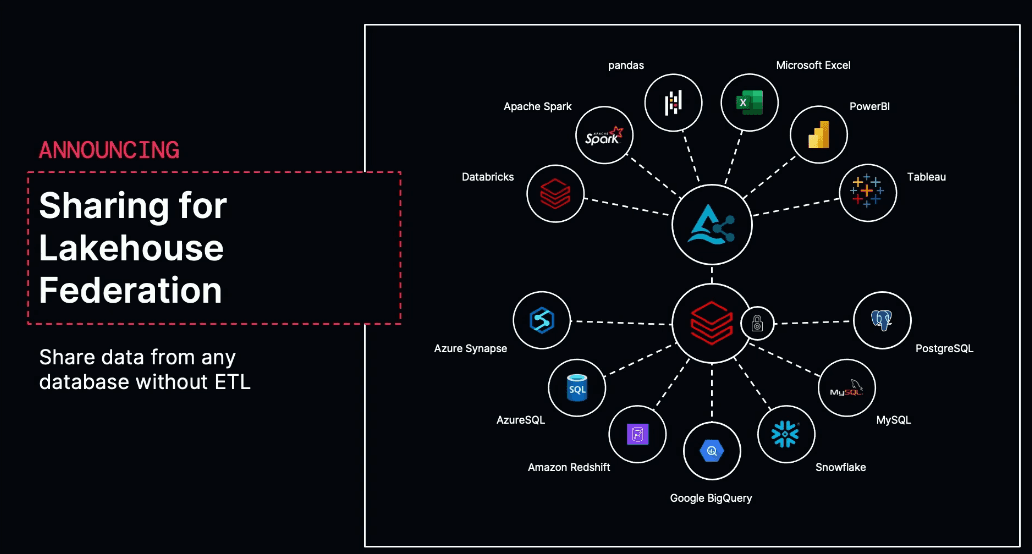

7. Lakehouse Federation

Databricks introduced Lakehouse Federation capabilities, which integrate with Hive and AWS Glue. This feature allows seamless querying across multiple data sources and unifies data management under a single governance framework. It simplifies data access and enhances data discoverability across different platforms.
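Lakehouse Federation itself is configured in Databricks through connections and foreign catalogs, which this sketch does not use. As a loose analogy only, Python's built-in `sqlite3` module can attach a second, independent database and join across both using qualified names in a single query, which is the core idea of querying several sources without first copying data between them. The `orders` and `crm.customers` tables are hypothetical.

```python
import sqlite3

# Two independent in-memory databases stand in for two separate systems.
# This is an analogy for federated querying, not Databricks Lakehouse Federation.
conn = sqlite3.connect(":memory:")
conn.execute("ATTACH DATABASE ':memory:' AS crm")

conn.execute("CREATE TABLE main.orders (order_id INTEGER, customer_id INTEGER, amount REAL)")
conn.execute("CREATE TABLE crm.customers (customer_id INTEGER, region TEXT)")
conn.executemany("INSERT INTO main.orders VALUES (?, ?, ?)",
                 [(1, 10, 25.0), (2, 11, 40.0), (3, 10, 15.0)])
conn.executemany("INSERT INTO crm.customers VALUES (?, ?)",
                 [(10, "EMEA"), (11, "APAC")])

# One query over both sources, addressed by qualified names.
rows = conn.execute("""
    SELECT c.region, SUM(o.amount) AS revenue
    FROM main.orders AS o
    JOIN crm.customers AS c ON o.customer_id = c.customer_id
    GROUP BY c.region
    ORDER BY c.region
""").fetchall()
print(rows)  # [('APAC', 40.0), ('EMEA', 40.0)]
```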

8. Delta Sharing and Lakehouse Federation

Delta Sharing has been updated to support Lakehouse Federation, enabling more extensive data sharing capabilities. This enhancement allows organizations to share data securely and efficiently across various platforms and environments, fostering greater collaboration and data utilization.

9. Variant Data Type for JSON

The new VARIANT data type improves the handling of semi-structured data such as JSON. It speeds up JSON processing and supports more complex data transformations, making it easier to work with diverse data types.
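In Databricks SQL and Spark 4.0, JSON strings are converted with `parse_json` and fields are read with `variant_get` (or the `:` path syntax in Databricks). The plain-Python sketch below only mimics the idea: records of different shapes share one column, each is parsed once, and a path that does not exist yields `None` instead of an error. The `get_path` helper and its simplified `$.field[0]` syntax are hypothetical, and the sketch does not model VARIANT's binary encoding.

```python
import json
import re
from typing import Any

# Matches one path step: ".name" (object field) or "[0]" (array index).
_TOKEN = re.compile(r"\.(\w+)|\[(\d+)\]")

def get_path(value: Any, path: str) -> Any:
    """Resolve a simplified '$.field[0].sub' path against parsed JSON.

    Returns None when any step is missing or has the wrong type, similar in
    spirit to a missing path producing NULL rather than an error.
    """
    if not path.startswith("$"):
        raise ValueError("path must start with '$'")
    rest, pos, current = path[1:], 0, value
    while pos < len(rest):
        m = _TOKEN.match(rest, pos)
        if m is None:
            raise ValueError(f"unsupported path syntax near {rest[pos:]!r}")
        key, index = m.groups()
        if key is not None:
            current = current.get(key) if isinstance(current, dict) else None
        else:
            i = int(index)
            current = current[i] if isinstance(current, list) and i < len(current) else None
        if current is None:
            return None
        pos = m.end()
    return current

# Records with different shapes live in the same "column"; each is parsed once.
rows = [
    '{"user": {"id": 7, "tags": ["new", "mobile"]}}',
    '{"user": {"id": 9}, "referrer": "ads"}',
]
parsed = [json.loads(r) for r in rows]
print([get_path(v, "$.user.id") for v in parsed])       # [7, 9]
print([get_path(v, "$.user.tags[0]") for v in parsed])  # ['new', None]
```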

10. Clean Rooms

Databricks introduced clean rooms, which provide secure data collaboration environments. These clean rooms allow multiple parties to collaborate on data without exposing sensitive information, ensuring privacy and compliance while enabling advanced data analysis.
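A minimal sketch of the clean-room idea, under stated assumptions: two parties contribute customer lists, and the only permitted query returns the size of their overlap, suppressed when it falls below a threshold. The `overlap_count` function and the `MIN_AGGREGATE` threshold are hypothetical, not Databricks Clean Rooms APIs. In a real clean room the computation runs in an isolated environment, not in one Python process that can see both inputs.

```python
import hashlib
from typing import Optional, Set

MIN_AGGREGATE = 3  # hypothetical privacy threshold, not a Databricks setting

def pseudonymize(email: str) -> str:
    """Normalize and hash an identifier so raw emails are never compared directly."""
    return hashlib.sha256(email.strip().lower().encode("utf-8")).hexdigest()

def overlap_count(party_a: Set[str], party_b: Set[str],
                  min_size: int = MIN_AGGREGATE) -> Optional[int]:
    """The one approved query: report how many customers overlap, never which ones.

    Counts below min_size are suppressed (None) so small groups cannot be singled out.
    """
    a = {pseudonymize(e) for e in party_a}
    b = {pseudonymize(e) for e in party_b}
    n = len(a & b)
    return n if n >= min_size else None

retailer = {"ann@example.com", "bob@example.com", "cy@example.com", "dee@example.com"}
advertiser = {"ANN@example.com", "bob@example.com", "cy@example.com", "eve@example.com"}

print(overlap_count(retailer, advertiser))             # 3
print(overlap_count({"bob@example.com"}, advertiser))  # None (below threshold)
```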

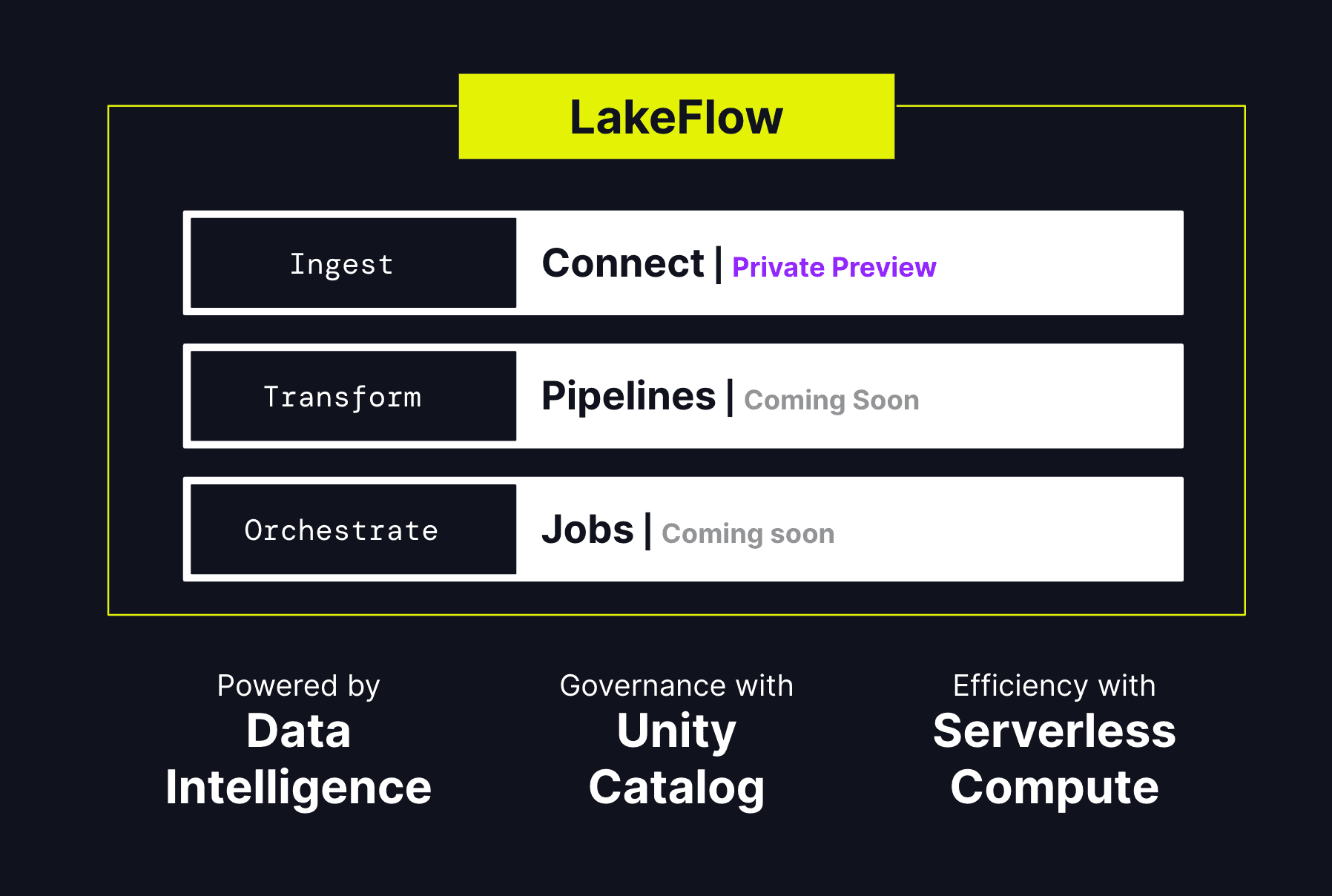

11. Databricks Lakeflow

The new Lakeflow feature simplifies data orchestration and workflow management across the lakehouse. It provides tools for creating, scheduling, and monitoring data pipelines, ensuring efficient data movement and processing within the lakehouse architecture.

What is Databricks Lakeflow?

Databricks Lakeflow streamlines and automates data workflows within the Databricks platform, enhancing orchestration of data engineering, machine learning, and analytics pipelines.

Key Components of Lakeflow:

Lakeflow Connect: Seamlessly integrate various data sources into your lakehouse, ensuring real-time data flow and consistent data quality.

Lakeflow Pipelines: Automate complex ETL processes, providing a robust framework for data transformation and enrichment.

Lakeflow Jobs: Schedule and orchestrate data workflows, enabling automated data processing and reducing manual intervention.
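Orchestration of the kind Lakeflow Jobs provides rests on a dependency graph: a task runs only after the tasks it depends on have finished. The sketch below uses Python's standard `graphlib.TopologicalSorter` to run a hypothetical five-task job in dependency order. The task names and the `run_job` helper are illustrative assumptions, and scheduling, retries, and monitoring are left out.

```python
from graphlib import TopologicalSorter

# Hypothetical job: each task maps to the set of tasks it depends on.
job = {
    "ingest_orders": set(),
    "ingest_customers": set(),
    "clean_orders": {"ingest_orders"},
    "join_customers": {"clean_orders", "ingest_customers"},
    "refresh_dashboard": {"join_customers"},
}

def run(task: str) -> None:
    # Placeholder for real work (a notebook, a pipeline update, a SQL query, ...).
    print(f"running {task}")

def run_job(dag: dict[str, set[str]]) -> list[str]:
    """Run tasks in waves: every task whose dependencies have finished is ready together."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # also raises CycleError if the dependencies form a cycle
    order: list[str] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # tasks in one wave could run in parallel
        for task in ready:
            run(task)
            order.append(task)
        sorter.done(*ready)
    return order

order = run_job(job)
```

Tasks that become ready in the same wave, such as the two ingestion tasks here, have no dependency on each other, which is what lets an orchestrator run them in parallel.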

These announcements underscore Databricks' commitment to advancing data and AI capabilities, providing organizations with the tools they need to innovate and scale their data operations effectively.